My Role

Audited and introduced a UX design system that improved collaboration and efficiency in a disconnected, scaling team.

Company

Antwalk

Industry

Employee management

During my six-month apprenticeship at Keysight Technologies, I designed test automation workflows at scale.

I completed a six-month capstone project with Keysight through UCSC. Keysight's test automation platform supports engineers running complex tests across distributed instruments and global labs. These workflows generate large volumes of data that teams rely on to monitor system health, debug failures, and make time-sensitive decisions. The dashboard plays a critical role in how quickly teams can act on this information.

My Team at the Keysight Office

Test automation relies on precise coordination across instruments, schedules, and global labs.

A single delay or missed signal can stall test cycles and block downstream decisions. Teams work across time zones, making real-time visibility and reliable handoffs essential. The dashboard needed to support coordination, not just data monitoring.

Engineers had data, but lacked clarity on status, failures, and next steps.

The existing dashboard surfaced large amounts of information without clear prioritization. Error states were hard to interpret, important signals were buried, and users often had to switch tools to gain context. This slowed decision-making and increased manual effort.

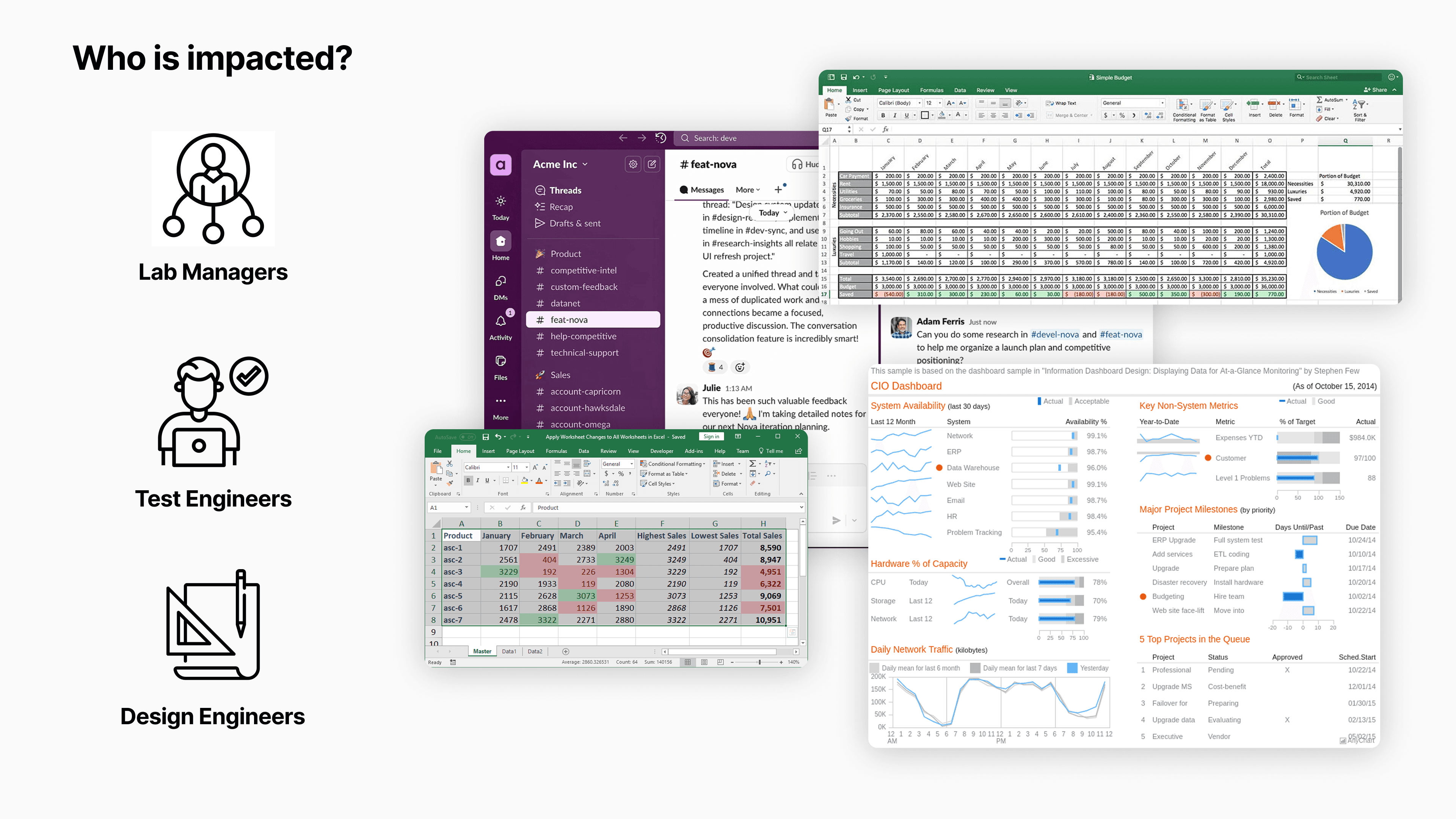

Users described shifting between Excel sheets, Slack channels, email, dashboards, and other self-made tools.

I led UX strategy and research to align the dashboard with how teams actually make decisions.

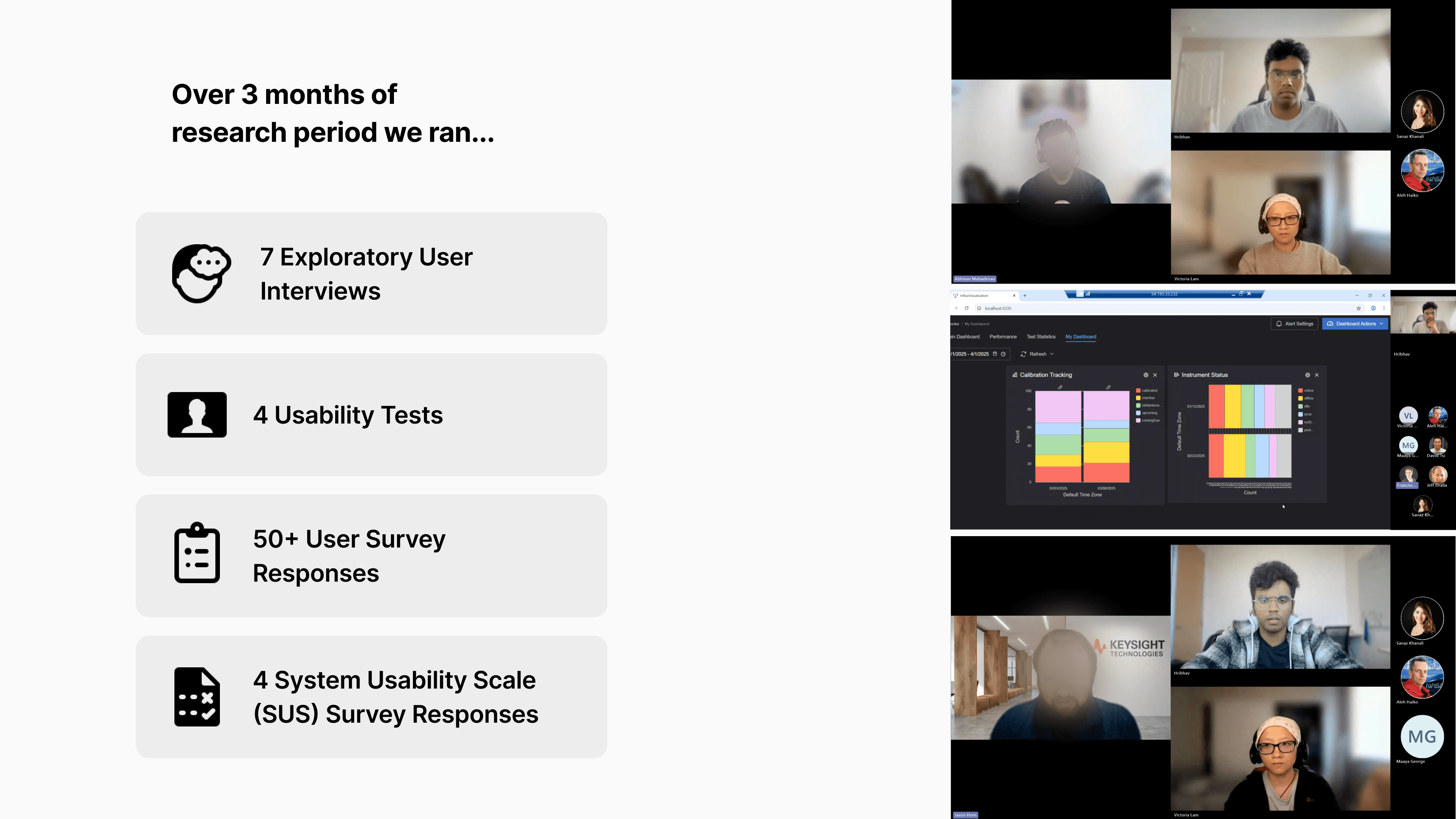

I worked closely with test engineers, lab managers, and technical leads to understand how different roles used the dashboard under real production constraints. I planned and conducted stakeholder interviews, usability testing on the existing system, surveys, and workflow walkthroughs. This research helped map role-specific goals, decisions, and friction points, and directly informed how information should be surfaced and prioritized.

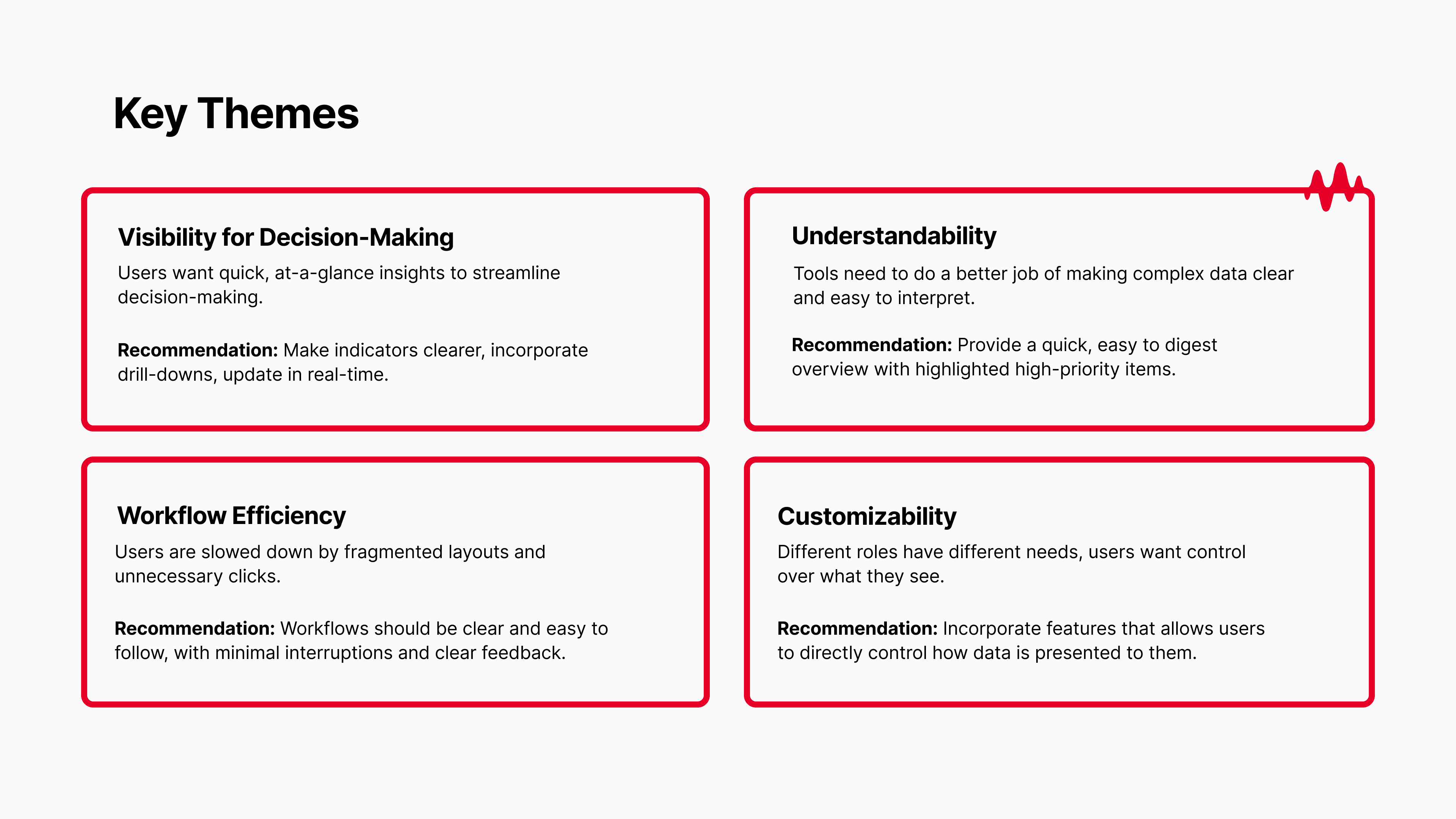

Four themes emerged: visibility, understandability, efficiency, and customizability.

Users needed better visibility into test status and failures. They struggled to understand what metrics meant and how to act on them. Workflows were inefficient, and the system lacked flexibility to adapt views by role or context.

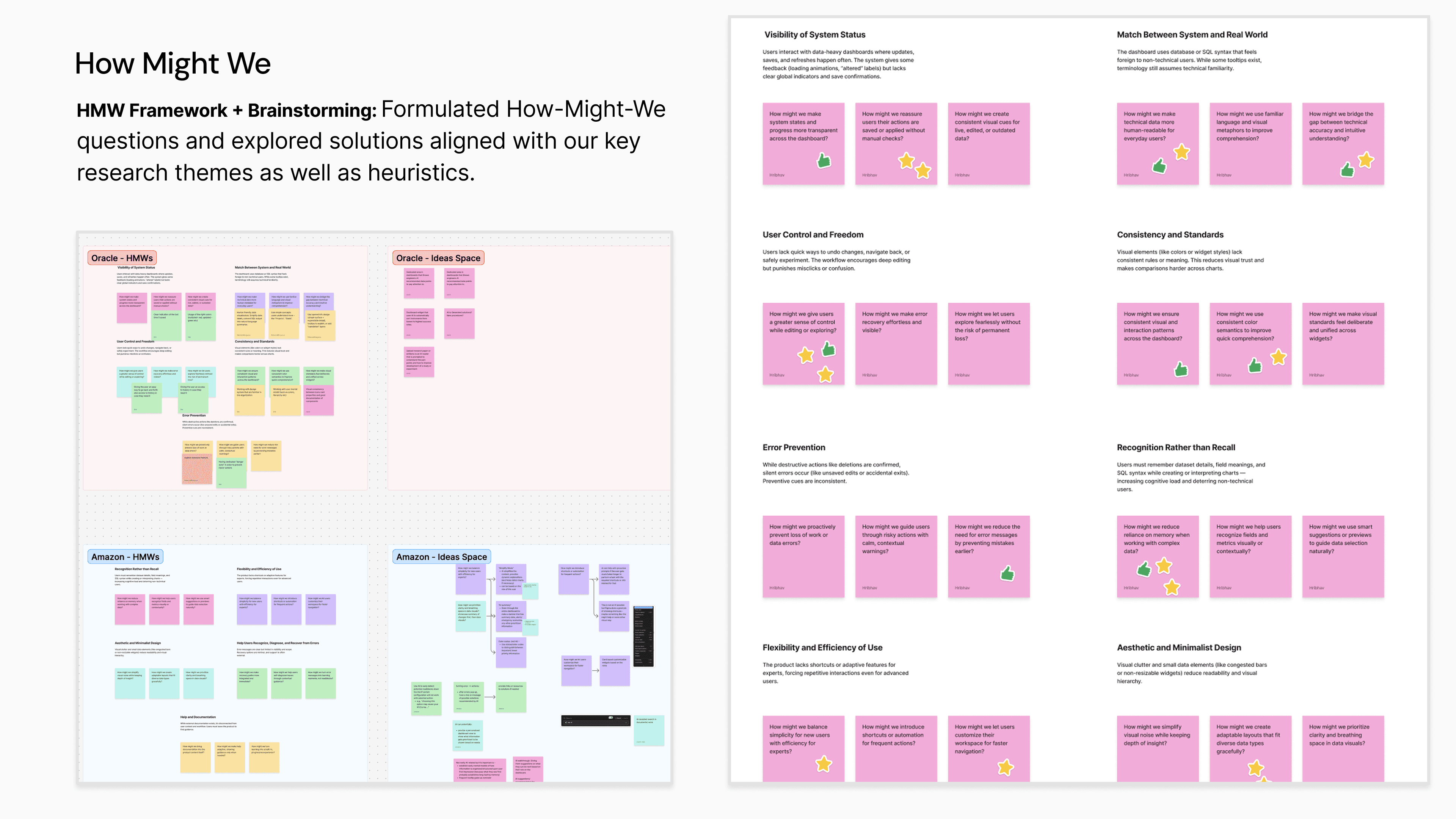

These themes guided ideation and narrowed the solution space.

We clustered ideas around the four themes to avoid designing isolated features. Ideation sessions focused on how improvements could work together across workflows. This helped us move from scattered ideas to cohesive system-level concepts.

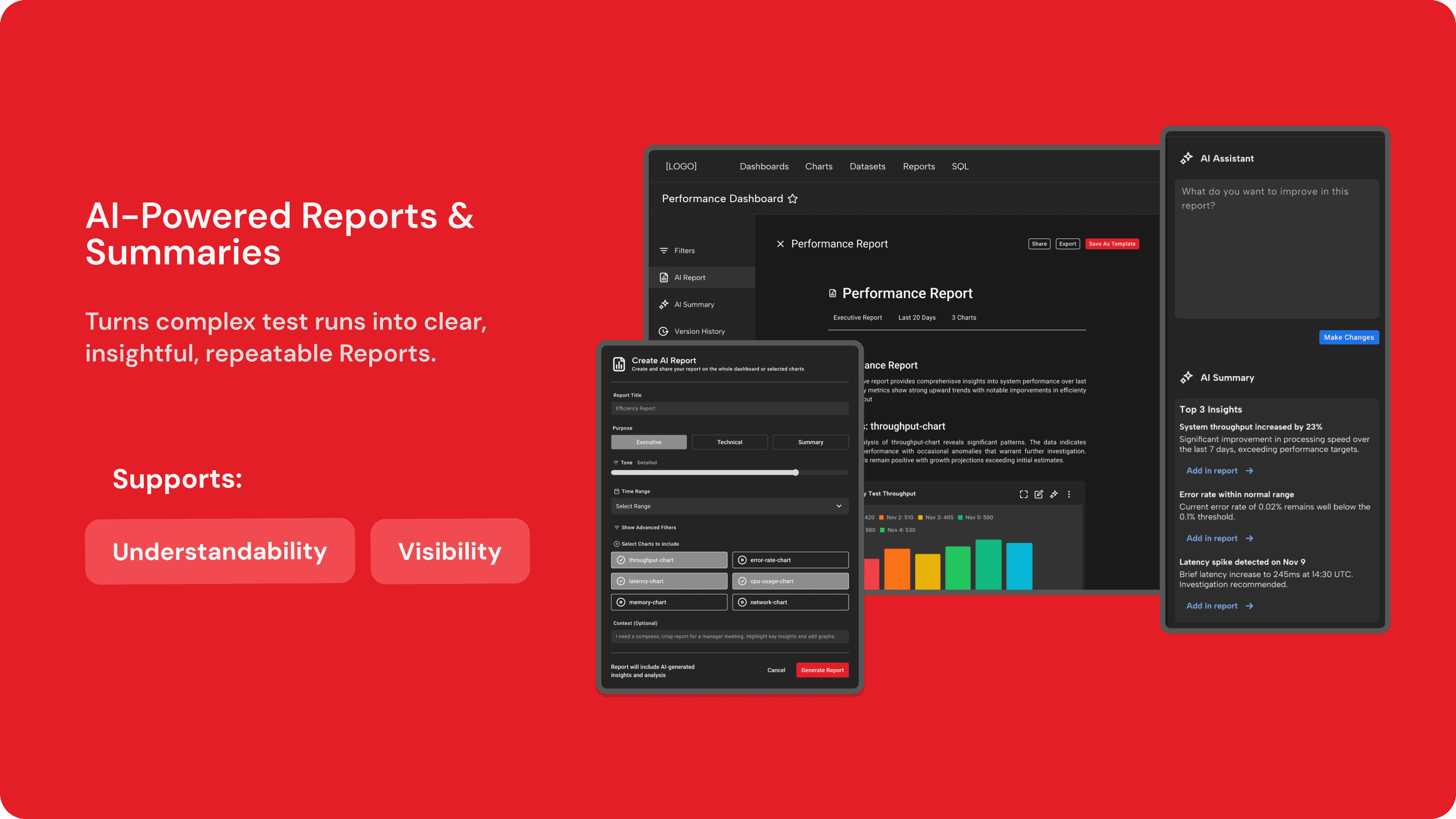

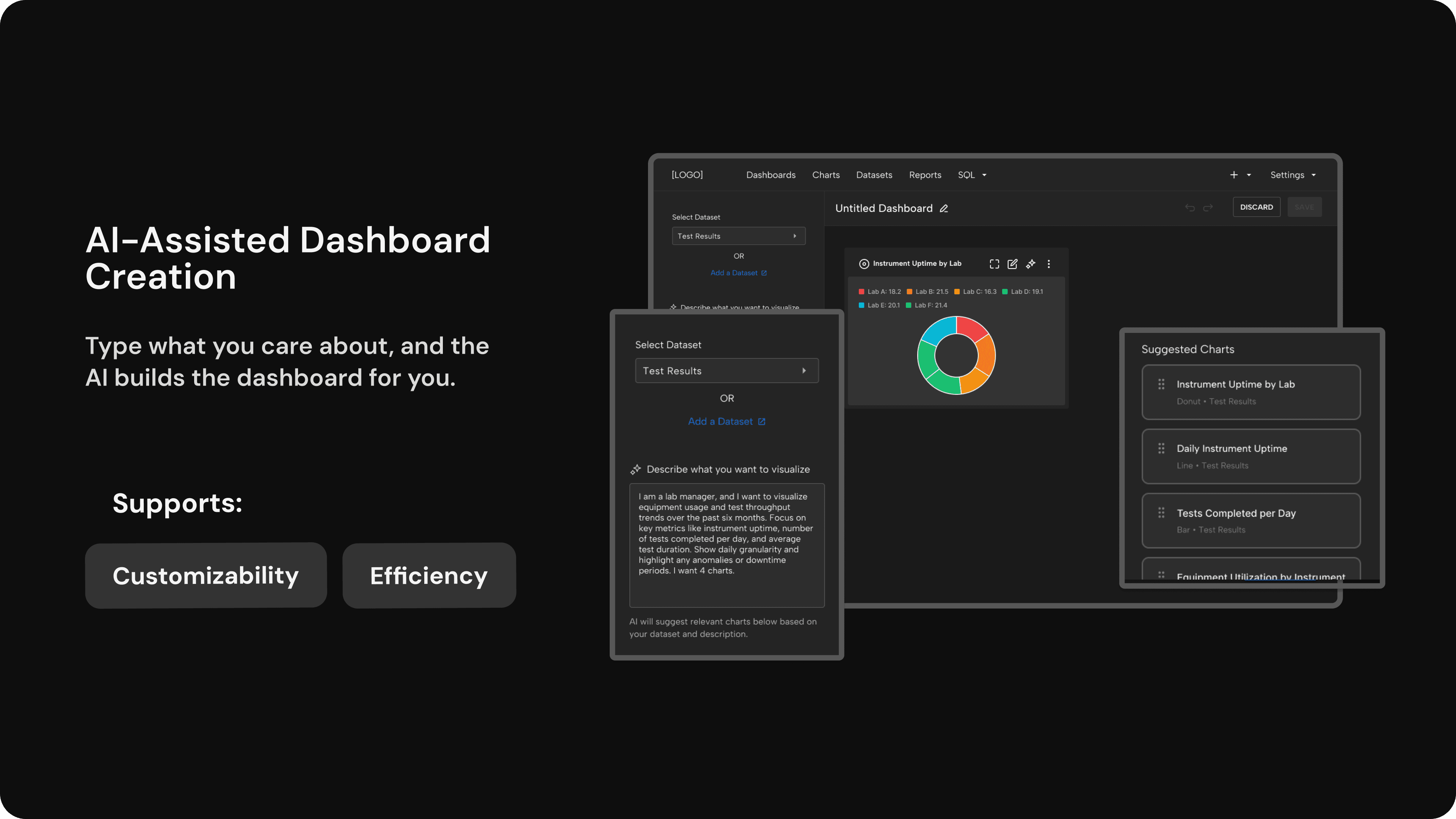

The solution centered on three AI-enabled capabilities.

Role-aware dashboards adjusted information density based on responsibility and context.

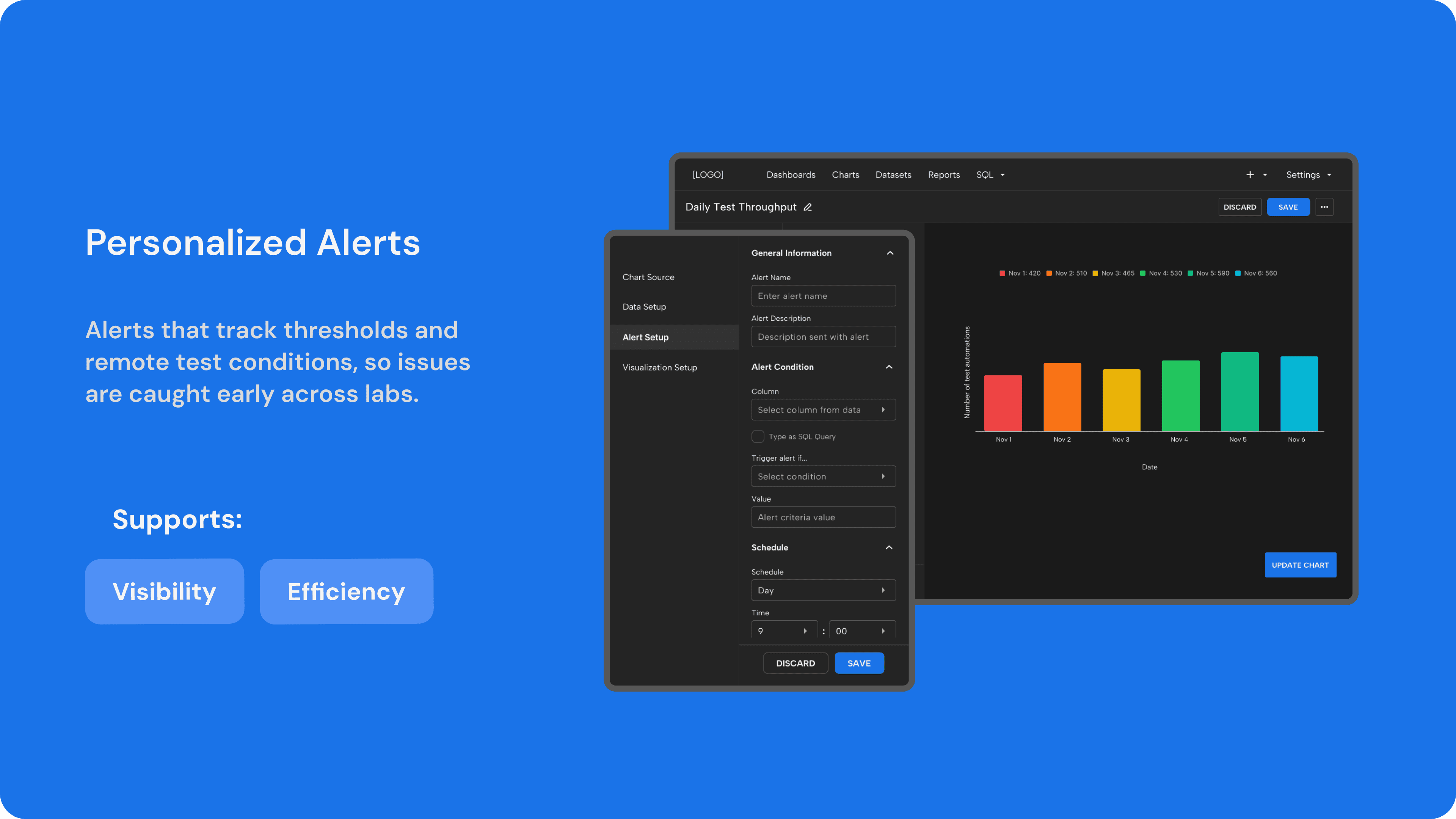

Intelligent alerting reduced noise by surfacing meaningful changes instead of raw activity.

Progressive drill-downs let users move from summary views to detailed logs without losing context.
